An Audit of 9 Algorithms used by the Dutch Government

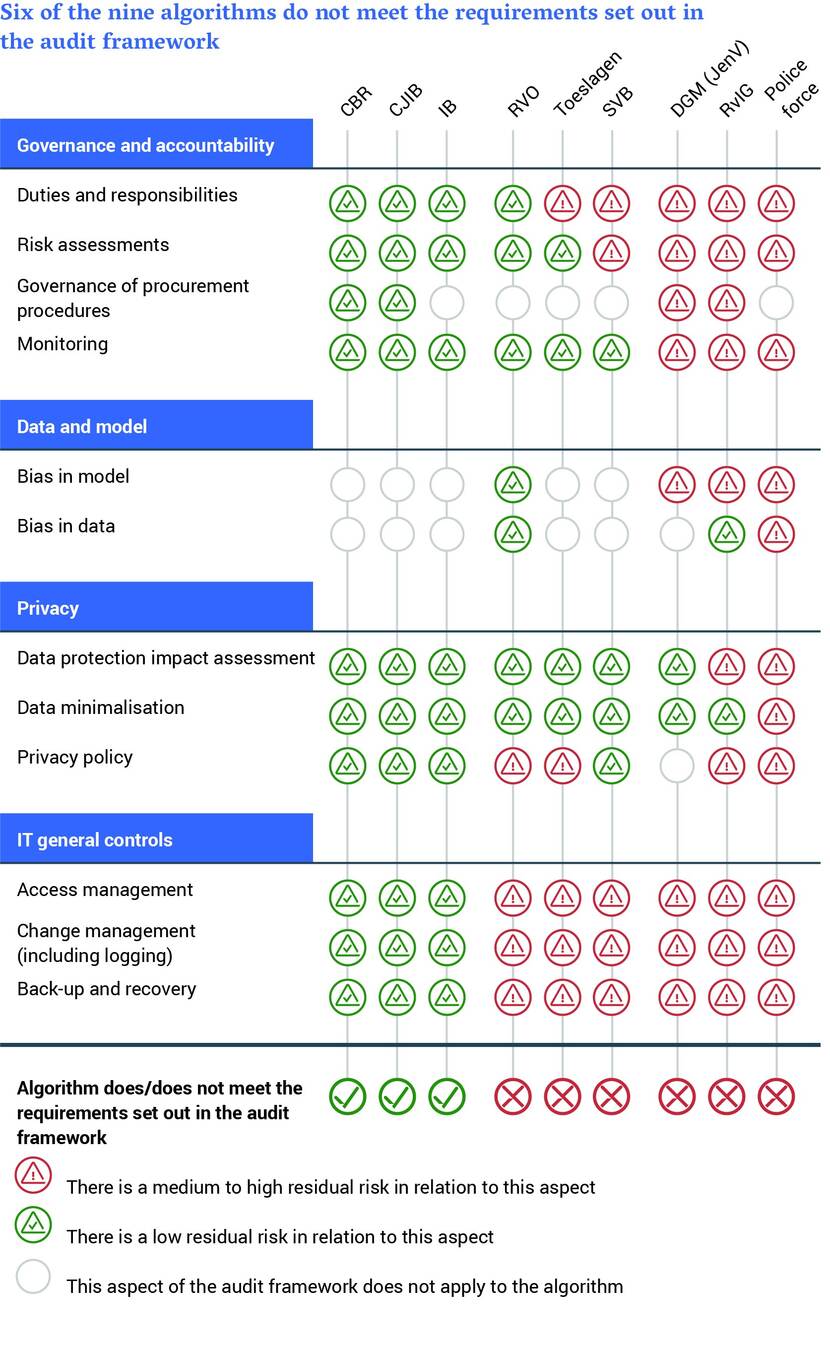

Responsible use of algorithms by government agencies is possible but not always the case in practice. The Netherlands Court of Audit found that 3 out of 9 algorithms it audited met all the basic requirements, but the other 6 did not and exposed the government to various risks: from inadequate control over the algorithm’s performance and impact to bias, data leaks and unauthorised access.

This is Sandra.

Sandra is in the middle of moving into a new home.

And because she is going to rent...

she decides to apply for rent allowance from the government.

Besides Sandra there are thousands of other Dutch citizens...

who make these kinds of applications every day.

In order to make all these applications run faster and more smoothly...

the government makes use of algorithms.

An algorithm is a series of commands that a computer follows step by step.

It can be used for all sorts of purposes.

For example, it automatically links your license plate to your address...

if you are caught, so that the traffic fine arrives at the right address.

But an algorithm can also check whether you are entitled to a pension...

or, as in Sandra's case, whether she is entitled to rent allowance.

That is efficient, but there are also risks involved.

That is why the Netherlands Court of Audit investigates whether algorithms work well...

and whether they are used wisely.

Does the algorithm see that Sandra's application meets all the conditions?

Then the money is paid out automatically.

But if that is not the case, or if it is unclear, then an official reviews her application...

and determines whether Sandra will receive money or not.

This can sometimes mean it takes longer before a decision is made.

If an algorithm is not used correctly, then a risk of discrimination arises...

or there is a chance that data of citizens or companies is not secure.

In case of careless use, citizens like Sandra, or companies...

may not get what they are entitled to.

The Netherlands Court of Audit therefore makes recommendations...

to ministers and Parliament

so we can exploit the opportunities offered by algorithms

and limit possible adverse effects on citizens and businesses.

Because that is what the Netherlands Court of Audit stands for.

That government policy must be implemented economically, efficiently and effectively

So Sandra and thousands of other Dutch citizens get what they are entitled to.

Want to know more? Go to rekenkamer.nl/algoritmes.

For An Audit of 9 Algorithms used by the Dutch Government, published on 18 May 2022, we carried out a risk analysis to select 9 algorithms, each used by a different government organisation, and assessed their performance. To prepare for this audit, the Court of Audit had developed an assessment framework in 2021 in consultation with many government organisations. In doing so, we asked which requirements an algorithm must meet at a minimum. The government did not have such a framework of basic requirements. The audit then assessed the 9 algorithms against this framework.

We audited both simple and complex algorithms. Some played a supporting role, such as those that send traffic fines to the right address or check whether aliens have already registered in the Netherlands. Others took decisions partly automatically, for instance to award housing benefits, to decide whether a business was eligible for financial support from the TVL scheme to combat the COVID-19 pandemic, and to decide whether an applicant was medically fit to drive a motor vehicle.

Not meeting basic requirements

The algorithms we audited that were used by the police, the Ministry of Justice and Security’s Directorate-General for Migration and the National Office for Identity Data did not meet the basic requirements on several counts. The last 2 organisations had outsourced the development and management of algorithms but had not made agreements on who was responsible for what. The National Office for Identity Data could not independently verify that its algorithm correctly assessed the quality of passport photographs. This could lead to discrimination. Furthermore, it did not assess the consequences for data protection. The Criminality Anticipation System used by the police to forecast where and when there is a high risk of incidents does not check for bias.

IT general controls often ineffective

Six of the 9 organisations we audited did not know which members of staff had access to the algorithm and its data. Unauthorised access to systems can lead to data being corrupted or lost, with potentially dire consequences for citizens and businesses. The Netherlands Enterprise Agency (which provides financial support under the government’s TVL scheme), the autonomised Benefits department of the Tax and Customs Administration (which awards housing benefits) and the Social Insurance Bank (which assesses state pension applications) are all exposed to risks because their IT general controls are not in order.
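To illustrate the kind of check that helps here, the sketch below compares the accounts that actually have access with the list of staff authorised to have it. It is a minimal example under stated assumptions, not a description of any audited organisation's systems: the function name, the account names and both lists are hypothetical.

```python
def unauthorised_accounts(accounts_with_access, authorised_staff):
    """Return accounts that have access but are not on the authorised list."""
    return sorted(set(accounts_with_access) - set(authorised_staff))


# Hypothetical data: in practice these would come from the system's
# access-control records and from the organisation's authorisation register.
accounts_with_access = ["j.devries", "m.jansen", "temp_contractor"]
authorised_staff = ["j.devries", "m.jansen", "s.bakker"]

flagged = unauthorised_accounts(accounts_with_access, authorised_staff)
print(flagged)  # ['temp_contractor']
```

Running such a comparison periodically, and following up on every flagged account, is one simple way to keep track of who can reach an algorithm and its data.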

What are our recommendations?

We make several recommendations to the ministers concerned. They are consistent with the recommendations we made in the audit report we published in 2021.

- Particularly where algorithms are outsourced or bought from an external supplier, document agreements on their use and make effective arrangements for monitoring compliance on an ongoing basis.

- Ensure that algorithms and the data required for their operation are protected by effective IT general controls.

Another recommendation concerns the risk of bias in the model or as an outcome of its use.

- Check regularly – during both the design and use of algorithms – for bias, in order to prevent any undesirable systemic variations in relation to specific individuals or groups of individuals.
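As a rough illustration of what a regular bias check could look like, the sketch below compares approval rates between groups and flags large gaps for human review. This is one common and deliberately simple measure, not the method used in the audit or prescribed by the assessment framework. The group labels, the sample data and the review threshold are all made up for the example.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved applications per group.

    `decisions` is an iterable of (group, approved) pairs; the group
    labels and this data layout are hypothetical.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def largest_rate_gap(rates):
    """Difference between the highest and the lowest approval rate."""
    return max(rates.values()) - min(rates.values())


# Made-up sample data, for illustration only.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(sample)
gap = largest_rate_gap(rates)

# Hypothetical threshold; a real one needs domain and legal input.
REVIEW_THRESHOLD = 0.2
needs_review = gap > REVIEW_THRESHOLD

print(rates)         # {'A': 0.75, 'B': 0.25}
print(gap)           # 0.5
print(needs_review)  # True
```

A gap on its own does not prove discrimination, since groups can differ for legitimate reasons. It is a signal that the outcomes deserve a closer look by people who understand the scheme.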

Why did we audit these 9 algorithms?

Algorithms are useful and helpful but not without risk. One of our recommendations is that the ministers concerned prevent undesirable systemic variations by checking for the presence of bias. This will reduce the risk of undesirable outcomes. Without algorithms, however, government policy could not be implemented. Algorithms take millions of decisions, solve millions of problems and make millions of forecasts quickly and automatically every month. Our audit found that taking an algorithm out of use, as the Social Insurance Bank did with its algorithm to assess personal budget applications, can also harbour risks. Civil servants then have to perform more work manually and fewer warnings are received of the potential misuse of a public service. Citizens and businesses must be confident that the government uses algorithms responsibly.

What audit methods did we use?

The audit built on an analysis we had made for our first audit of algorithms (‘Understanding Algorithms’), which we carried out in 2021. We supplemented the analysis with new information from several sources and assessed the use of 9 algorithms against our algorithm assessment framework. The 9 algorithms were selected on several criteria: impact on citizens and businesses, risk (where the risk of irresponsible use of an algorithm is highest) and the use of algorithms in different domains, such as the social domain and the security domain. The selected algorithms had to be in current use. We also selected different types of algorithm.

Current status

The audit findings prompted the State Secretary for Digitisation (of the Ministry of the Interior and Kingdom Relations) to take immediate action, thus demonstrating how seriously she takes the Court’s audit and her responsibility for the digitisation of government. She will work with the implementing organisations to tackle the shortcomings. She wants to inform the House of Representatives of any follow-up measures before the summer recess.

The audit report was published and sent to the House of Representatives and the Senate at the same time as the 2021 Accountability Audit on 18 May 2022. The report has also been posted on the Court of Audit’s website.

Previous relevant audit: Understanding Algorithms (26 January 2021)